About Angara

Angara is a leading e-commerce platform specializing in fine gemstone jewelry. They combine traditional craftsmanship with modern technology to offer exquisite pieces sourced directly from mines. Each item can be personalized, ensuring a unique touch. With a commitment to transparency and customer satisfaction, Angara provides a seamless shopping experience, making it a trusted name in the industry.

Objective

Our collaboration with Angara was driven by a well-defined objective aimed at improving their software development process.

- Reduce manual testing effort by introducing test automation: Traditional manual testing was time-consuming and resource-intensive, which extended testing cycles and created potential bottlenecks in the development pipeline.

Problem with the Current System

- Long Testing Cycles Due to Manual Testing:

- Relying solely on manual testing extended testing cycles, which affected development timelines.

- Manual testing consumed significant time and resources, delaying feature delivery and limiting test coverage, which in turn put product quality at risk.

- No Documentation of Test Cases: The initial testing process lacked comprehensive documentation, leading to inefficiencies and knowledge gaps.

Our Solution

1. Collaboration for Test Case and Test Data Documentation:

- Recognizing the importance of clear and comprehensive test documentation, the team collaborated closely with QA, development, and product teams to document test cases and associated test data.

- Conducted workshops and meetings to gather requirements and specifications for test scenarios, ensuring alignment with business objectives and user expectations.

- Documented test cases in a structured format, including test objectives, preconditions, steps, expected outcomes, and acceptance criteria, making them accessible and understandable to all stakeholders.
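The structured format described above could be captured as a simple record type. The following is a minimal sketch, not the template the team actually used: the class name, field names, identifier scheme, and the sample scenario are all hypothetical and chosen only to mirror the listed sections.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DocumentedTestCase:
    """One documented test case; fields mirror the sections listed above."""
    case_id: str                      # hypothetical identifier scheme
    objective: str
    preconditions: List[str]
    steps: List[str]
    expected_outcomes: List[str]
    acceptance_criteria: List[str] = field(default_factory=list)

    def is_complete(self) -> bool:
        """True only when every documented section has content."""
        return all([self.objective, self.preconditions, self.steps,
                    self.expected_outcomes, self.acceptance_criteria])


# Hypothetical example; the scenario and wording are illustrative only.
add_to_cart = DocumentedTestCase(
    case_id="TC-001",
    objective="A shopper can add a ring to the cart",
    preconditions=["User is on a product page"],
    steps=["Select a ring size", "Click 'Add to Cart'"],
    expected_outcomes=["Cart shows one item"],
    acceptance_criteria=["Cart count increases by exactly one"],
)

# A draft that still lacks acceptance criteria is flagged as incomplete.
draft = DocumentedTestCase(
    case_id="TC-002",
    objective="A shopper can remove a ring from the cart",
    preconditions=["Cart contains one item"],
    steps=["Click 'Remove'"],
    expected_outcomes=["Cart is empty"],
)
```

A completeness check like `is_complete()` is one way to decide which documented cases are ready to be translated into automated scripts.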

Benefits of Collaborative Test Documentation:

- Improved Clarity and Understanding: Collaborative test documentation ensured clarity and alignment of testing objectives across development, QA, and product teams, reducing ambiguity and misinterpretation.

- Enhanced Test Coverage: Documenting test cases in collaboration with stakeholders enabled comprehensive test coverage, ensuring that all critical functionalities and user scenarios were addressed.

- Facilitated Automation: Clear and well-documented test cases provided a solid foundation for test automation, enabling efficient translation of manual test cases into automated scripts.

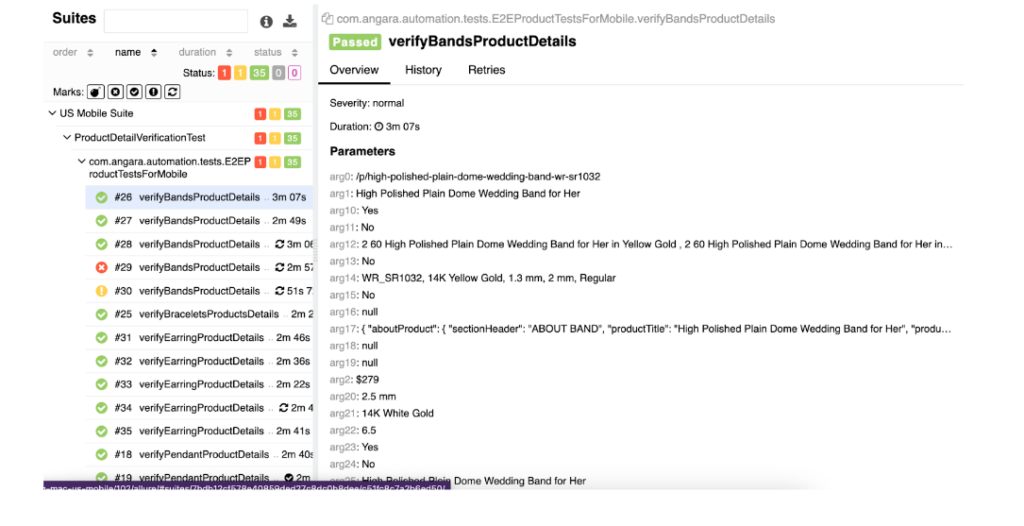

2. Development of a Data-Driven Framework:

- To manage the increasing complexity of tests and test data as our product portfolio expanded, we developed a robust data-driven testing framework.

- The data-driven framework enabled us to separate test scripts from test data, making it easy to manage and scale our test suites as the application’s test coverage grew.

- By parameterizing test data, we achieved flexibility in test execution, allowing us to run the same test scenario with different input values without modifying the test script.
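To illustrate the separation of test scripts from test data, here is a minimal sketch using only the Python standard library. It is not the framework built for Angara: the pricing function, the column names, and the data values are hypothetical, and an in-memory CSV stands in for an external data file.

```python
import csv
import io
from typing import Dict, List, Tuple

# Test data lives apart from the script. An in-memory CSV stands in for an
# external data file; the columns and values are hypothetical.
TEST_DATA = """metal,stone_price,setting_price,expected_total
gold,500,200,700
silver,500,50,550
platinum,500,400,900
"""


def quoted_total(stone_price: int, setting_price: int) -> int:
    """Hypothetical function under test: price of a personalized piece."""
    return stone_price + setting_price


def load_rows(source: str) -> List[Dict[str, str]]:
    """Parse the data source into one dict per test row."""
    return list(csv.DictReader(io.StringIO(source)))


def run_data_driven(rows: List[Dict[str, str]]) -> List[Tuple[str, bool]]:
    """Run the same scenario once per row and return (metal, passed) pairs."""
    results = []
    for row in rows:
        actual = quoted_total(int(row["stone_price"]), int(row["setting_price"]))
        results.append((row["metal"], actual == int(row["expected_total"])))
    return results


results = run_data_driven(load_rows(TEST_DATA))
```

Adding a new input combination means adding a row to the data source; the test script itself stays unchanged.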

3. Automation of Sanity/Regression Flows for Mobile and Web:

- We automated sanity and regression test flows for both mobile and web applications, targeting critical functionalities and user journeys.

- By automating these key test scenarios, we significantly reduced manual test cycle times, enabling faster feedback on application changes and reducing the risk of regression issues.
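One common way to organize sanity and regression flows is to tag each automated check with the suites it belongs to and then select a suite at run time. The sketch below shows that idea under stated assumptions: the decorator, the registry, and the two check functions are hypothetical placeholders, not the actual Angara test suite.

```python
from typing import Callable, Dict, List, Set

# Registry mapping each check function to the suites it belongs to.
REGISTRY: Dict[Callable[[], bool], Set[str]] = {}


def suite(*names: str):
    """Decorator that tags a check with one or more suite names."""
    def register(check: Callable[[], bool]) -> Callable[[], bool]:
        REGISTRY[check] = set(names)
        return check
    return register


@suite("sanity", "regression")
def check_homepage_loads() -> bool:
    return True  # placeholder for a real UI check


@suite("regression")
def check_checkout_with_saved_address() -> bool:
    return True  # placeholder for a real UI check


def select(suite_name: str) -> List[str]:
    """Names of all checks tagged with the given suite, in sorted order."""
    return sorted(c.__name__ for c, tags in REGISTRY.items() if suite_name in tags)


def run(suite_name: str) -> Dict[str, bool]:
    """Execute every check in the suite and report pass/fail per check."""
    return {c.__name__: c() for c, tags in REGISTRY.items() if suite_name in tags}
```

With this layout, a short sanity run after each build and a full regression run before a release draw from the same set of checks.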

Benefits of Automation Implementation:

- Increased Test Coverage: Automation allowed us to expand test coverage by automating repetitive and time-consuming test scenarios, including sanity checks and regression tests.

- Reduced Manual Effort: Automating test flows eliminated the need to execute repetitive tests by hand, freeing up QA resources to focus on exploratory and complex testing. It also gives them the flexibility to run automated tests as many times as required.

- Faster Feedback Loop: Automated tests provided rapid feedback on application changes, enabling early detection of defects and ensuring faster time-to-market for new features.

- Improved Reliability and Consistency: Automation ensured consistent test execution, reducing the likelihood of human errors and improving overall test reliability.

4. Visual Verification Automation:

Sikuli and OpenCV Integration

Implemented Sikuli and OpenCV to automate UI testing with visual verification:

OpenCV Integration:

- Leveraged OpenCV (Open Source Computer Vision Library) to perform visual verification during UI testing.

- Implemented image comparison techniques using OpenCV to validate UI elements against expected visual states or patterns, ensuring accuracy and reliability in UI testing.

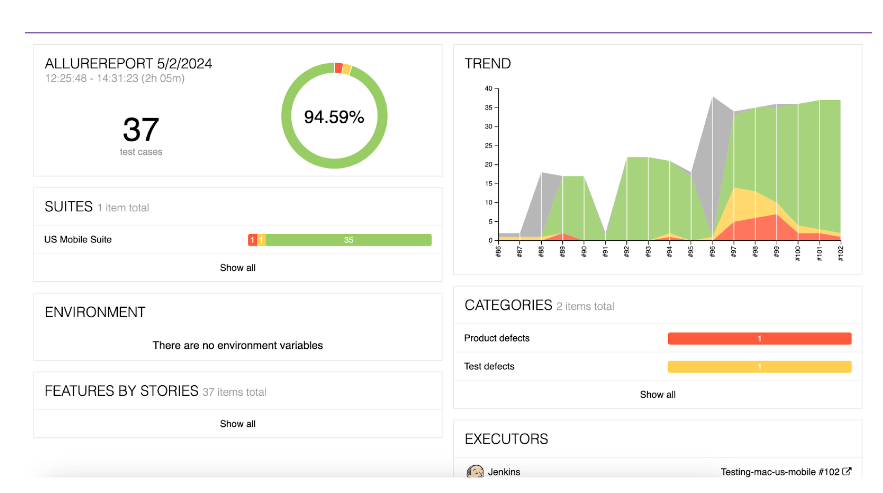

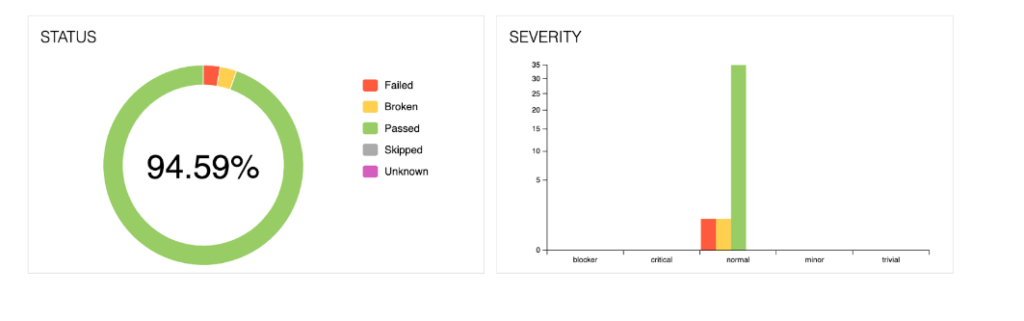
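The image comparison idea behind visual verification can be sketched without any dependencies. The example below is a simplified stand-in, not the OpenCV or Sikuli code used in the project: it compares two grayscale images pixel by pixel and tolerates small differences. In an OpenCV-based setup, functions such as `cv2.absdiff` or `cv2.matchTemplate` typically play this role. The function names and both thresholds are assumptions chosen for illustration.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels (0-255) stored as rows of ints


def mismatch_ratio(actual: Image, expected: Image, pixel_tolerance: int = 10) -> float:
    """Fraction of pixels whose brightness differs by more than the tolerance."""
    if len(actual) != len(expected) or any(
        len(a) != len(e) for a, e in zip(actual, expected)
    ):
        raise ValueError("images must have the same dimensions")
    total = sum(len(row) for row in expected)
    if total == 0:
        raise ValueError("images must not be empty")
    differing = sum(
        1
        for row_a, row_e in zip(actual, expected)
        for a, e in zip(row_a, row_e)
        if abs(a - e) > pixel_tolerance
    )
    return differing / total


def visually_matches(actual: Image, expected: Image, max_mismatch: float = 0.01) -> bool:
    """Pass when at most max_mismatch of the pixels differ noticeably."""
    return mismatch_ratio(actual, expected) <= max_mismatch


# Synthetic 10x10 images stand in for a baseline screenshot and two renders.
baseline = [[255] * 10 for _ in range(10)]
same_render = [[250] * 10 for _ in range(10)]   # slight brightness noise only
broken_render = [row[:] for row in baseline]
for y in range(5):
    for x in range(5):
        broken_render[y][x] = 0                  # a 5x5 region rendered wrong
```

The two tolerances serve different purposes: the per-pixel tolerance absorbs rendering noise such as anti-aliasing, while the mismatch ratio decides how much of the screen may differ before the check fails.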

Outcomes

Improved Testing Efficiency

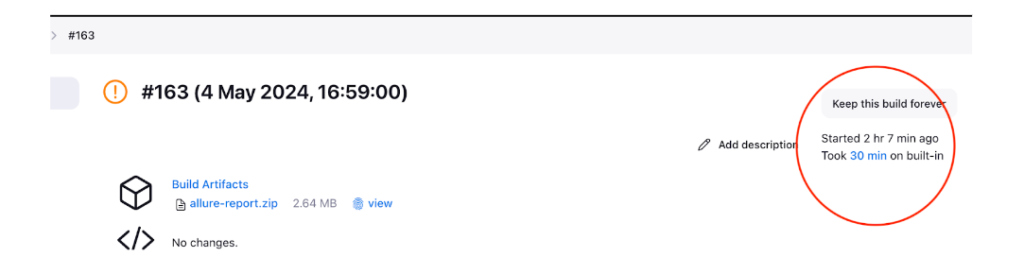

- Reduced the web application testing cycle from 5 hours to 30 minutes.

- Reduced the mobile application testing cycle from 5 hours to 2 hours when running automation on a single mobile device.

- Automation helped reduce manual effort and accelerate test execution, allowing testers to focus on more complex and exploratory testing.

What’s Next

- Integrate automated tests into our Continuous Integration (CI) pipeline, ensuring that they are executed automatically with each code change, providing timely validation of application stability.

- Set up an in-house device lab to ensure smooth and cost-effective automation runs for mobile applications.

- Reduce the mobile application testing cycle to 30 minutes, work that is already under way, by running tests in parallel on multiple mobile devices in the device lab.
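A rough sizing of the parallel run follows from the figures above: a 2-hour (120-minute) serial cycle reaches 30 minutes with at least 120 / 30 = 4 devices, assuming the tests split evenly and device setup adds no overhead. The sketch below shows that arithmetic together with a simple round-robin distribution of tests across devices. The device names, test names, and the `run_on_device` placeholder are hypothetical; a real run would drive physical devices instead.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def devices_needed(serial_minutes: float, target_minutes: float) -> int:
    """Minimum device count, assuming an even split and no setup overhead."""
    return math.ceil(serial_minutes / target_minutes)


def shard(tests: List[str], devices: List[str]) -> Dict[str, List[str]]:
    """Assign tests to devices in round-robin order."""
    plan: Dict[str, List[str]] = {d: [] for d in devices}
    for i, test in enumerate(tests):
        plan[devices[i % len(devices)]].append(test)
    return plan


def run_on_device(device: str, tests: List[str]) -> List[str]:
    """Placeholder for driving a real device; records what would run."""
    return [f"{device}:{t}" for t in tests]


def run_parallel(plan: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Run each device's shard concurrently, one worker per device."""
    with ThreadPoolExecutor(max_workers=len(plan)) as pool:
        futures = {d: pool.submit(run_on_device, d, ts) for d, ts in plan.items()}
        return {d: f.result() for d, f in futures.items()}


count = devices_needed(serial_minutes=120, target_minutes=30)
devices = [f"device-{n}" for n in range(1, count + 1)]
tests = [f"flow_{n}" for n in range(8)]
plan = shard(tests, devices)
executed = run_parallel(plan)
```

Round-robin sharding ignores how long each test takes, so in practice a duration-aware split may be needed to keep the slowest device close to the 30-minute target.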
